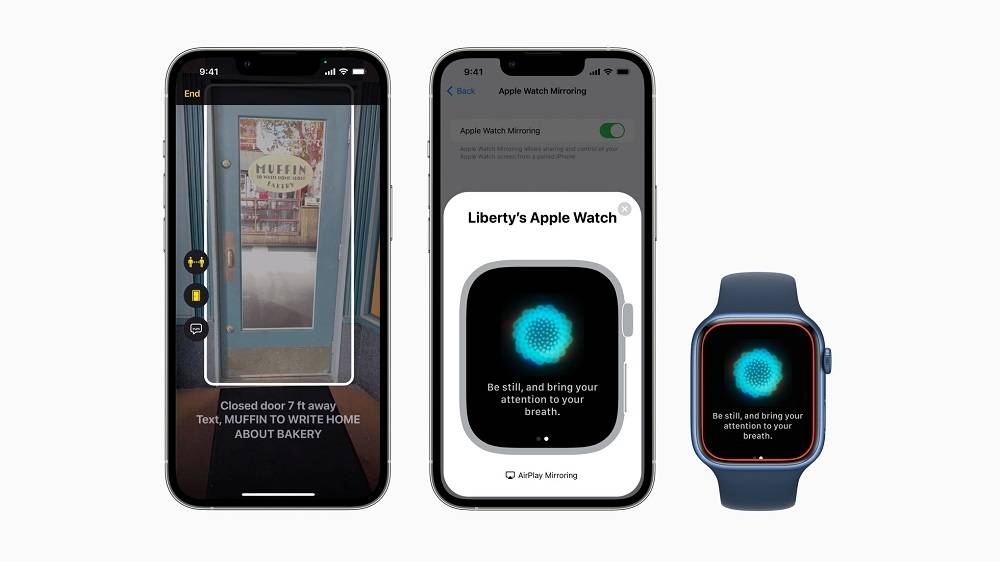

How the iPhone will help blind and low-vision users detect and open doors

SAN FRANCISCO, May 20 ― Apple has unveiled a host of new accessibility solutions for users with disabilities. These include a new feature that allows blind and visually impaired people to detect and analyse the attributes of a door, including information on how to open it.

This new mobility assistance feature is designed to help blind or low-vision users locate a door, analyse its attributes and estimate their distance from it, so that they can navigate it properly. The system will, for example, indicate whether the door is open or closed, and whether it is opened by pushing, pulling or turning a handle. The system can also detect and read signs and symbols around the door, such as an office number or the name and specialty of a store.

All this is made possible by LiDAR (light detection and ranging, a laser-based distance measurement system), found in the latest generations of iPhones, combined with the machine learning capabilities built into the device. “Door Detection” will soon be accessible via a new Detection mode within Magnifier, which is already part of the accessibility features built into iOS.

At the same time, Apple announced that Apple Watch Mirroring will make it possible to control an Apple Watch from an iPhone, using the iPhone's assistive features, such as Voice Control or Switch Control. It will also be possible to use voice commands instead of touching the smartwatch's screen. In addition, Apple is introducing new hand-gesture controls on its Watch. A double pinch, for example, will be enough to answer or end a call, dismiss a notification, take a picture, or play or pause media.

Another announcement from Apple, this time aimed at deaf and hard-of-hearing users, is the arrival of live captions on iPhone, iPad and Mac, regardless of the audio source (phone call, FaceTime, video conference, streaming, social networks, etc.). ― ETX Studio